The Perils of Open Weight AI Models

Analysis

By Sharath Kumar Kolipaka

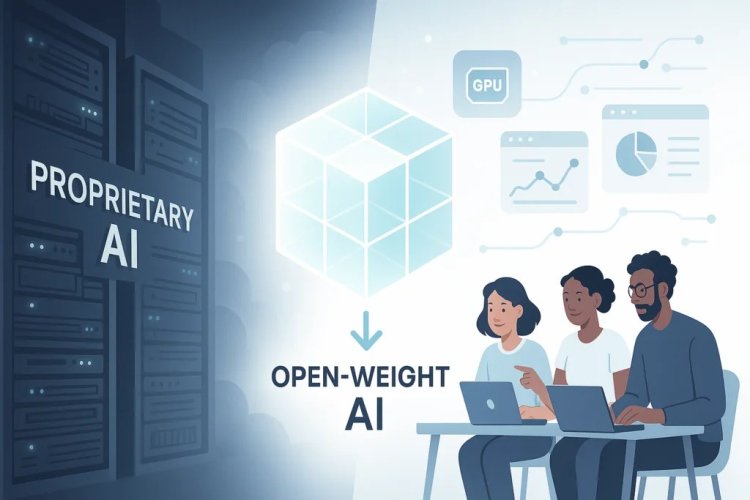

The last few years have been remarkable for AI development. Many models have left both the general public and developers in awe of their abilities, from solving math equations to producing high-resolution pictures and videos from prompts of just a few sentences. The AI assistants of futuristic movies are now a reality. If so, can we have one? The answer is yes: with the right resources, anyone can have their own AI, thanks to the open-weight and open-source models released online, which people can download, run on their own hardware, and train on their own data sets for specific tasks. This is already happening.

It is important to know the distinction between these two categories. Open-source models release the model weights together with the training code and training data sets. In contrast, open-weight models, such as Llama, Mistral, Gemma, DeepSeek, and Falcon, release the final model weights and often the inference code but, importantly, withhold the proprietary training data and training code. Open-source models are not the talk of the town at present because there is no truly open-source frontier model on the market right now, whereas several open-weight models of that calibre are widely available. Because of this availability, open-weight models are creating real, present-day concerns on many fronts.

The widespread availability of open-weight models has fueled a wave of legitimate organisations that are building successful businesses by specialising in specific parts of the AI value chain. Instead of shouldering the massive cost of training a frontier model, companies like Nous Research focus on fine-tuning models like Llama for superior conversational performance, while Perplexity AI integrates and fine-tunes these models with real-time web search for highly accurate, fact-checked answers. Simultaneously, "infrastructure unicorns" like Groq and Together AI have achieved popularity by solving the hard problem of deployment, offering custom hardware (like Groq's LPUs for near-instant inference) or premier cloud platforms for running and training the entire open ecosystem, effectively democratizing access to powerful AI technology.

But this availability also leads to some questionable and outright illegitimate usage of technology.

What happens when these models are used to do harm?

It’s an election year. Your social feeds are flooded with hundreds of thousands of voices pushing a specific agenda, spreading misinformation so fast that regulators can’t even make a dent in it. But here is the scary part: it might not be a grassroots movement of thousands of people. It could be a single entity with deep pockets, one organisation running an AI model designed to act as a digital agent. This agent manages an army of accounts, flooding the internet with content so natural that an untrained eye would never spot the fake. It understands context. It replies to comments with carefully prepared talking points, backed by "facts" that look real until you dig five layers deep. It is trained to walk the line, being just toxic enough to influence people without triggering platform bans.

The sophistication is terrifying. Such agents can create deepfake video and audio in local dialects, hitting the exact cultural notes needed to persuade tiny slices of the population. They can execute hyper-personalised cognitive warfare by analysing a person's social media profile. By mimicking real citizens, these bot networks can manipulate micro-communities in ways that were not possible before.

Most dangerously, they are chameleons. The AI can adopt any persona—a middle-aged housewife concerned about prices one moment, an aggressive NRI businessman the next. They can target and insult groups without technically breaking platform rules, pushing products or ideologies while pretending to be just like you. This is an advanced political persuasion tool capable of debating users with prepared propaganda that is incredibly difficult to disprove in real-time.

While traditional propaganda tries to change what you think, cognitive warfare tries to change how you think. It targets the brain’s processing errors—our biases, fears, and inability to verify fast-moving data—to fracture trust and make societies destroy themselves from the inside out. The objective is to weaponise our own perception of reality until we can no longer distinguish friend from enemy.

The Missing Link: Operationalising the Threat

For years, this type of total warfare was limited by human speed and scale. AI models have removed those limits. They are the "missing link" that allows for automated, personalised mass manipulation. And we aren't just speculating: we are seeing it play out in real time across every major recent conflict.

- The United States: The "Phantom" Candidate. In the recent US election cycle, the theoretical threat became reality. Thousands of voters picked up their phones to hear President Biden's voice urging them not to vote. It wasn't him; it was an AI clone, deployed for pennies but requiring federal intervention to stop. Simultaneously, we saw the "Doppelganger" campaign—a Russian operation that didn't just write fake news, but used AI to clone the actual websites of major media outlets (like The Washington Post or Fox News), filling them with anti-Ukraine narratives that looked identical to real reporting.

- Russia-Ukraine: The First AI War. Ukraine gave us the first terrifying glimpse of "deepfake diplomacy." Early in the invasion, a video aired on hacked TV stations showing President Zelenskyy standing at his podium, ordering his soldiers to lay down their arms and surrender. It was a deepfake. While clumsy by today's standards, it proved that the identity of a world leader could be hijacked to break the will of an army.

- Israel-Palestine: The "Liar's Dividend". In the Israel-Gaza conflict, the line between reality and fiction has evaporated. We witnessed the "All Eyes on Rafah" campaign, built around an AI-generated image that sanitised the war zone into endless, orderly tents, go viral; it was shared over 50 million times, shaping global sentiment with a scene that didn't exist. Conversely, we saw the "Liar's Dividend" in full effect: real, horrific images of charred infants were dismissed by millions as "AI fakes" because the public no longer trusts its own eyes. The weapon isn't just the lie; it's the total collapse of belief in the truth.

Major geopolitical players are industrialising the use of open-weight AI models for state-sponsored "Influence Operations" (IO). The aim is to create a "synthetic consensus": flooding social media with bots so that fringe opinions appear to be the majority view. Notable examples include the Russia-linked "CopyCop" network, which uses AI to rewrite legitimate news articles with political bias for publication on fake sites, and the China-linked "Spamouflage" network, which generates an endless stream of comments to harass critics and artificially amplify pro-China narratives, even employing AI to create sexually explicit deepfakes to silence female politicians and journalists.

Maybe the most dangerous threat is in the realm of cybersecurity. AI is removing the technical barrier to entry, giving state-of-the-art weapons to small teams. A tiny Advanced Persistent Threat (APT) cell no longer needs an army of elite hackers to cause global chaos; they just need the right model. It is a substantial force multiplier that makes sophisticated attacks cheap and instantly scalable. What used to take months of planning and millions of dollars can now be automated and unleashed on the world in seconds. The availability of open-weight models has led to the rise of "Crime-as-a-Service" startups on the dark web. These operations, exemplified by WormGPT, FraudGPT, and DarkBERT, fine-tune open models on criminal datasets to create sophisticated, subscription-based AI tools for cybercriminals. These tools are used to write highly convincing phishing emails, generate malware, and create undetectable scam pages, effectively lowering the barrier to entry for complex, large-scale attacks.

In the European Union and the United States, the approach is characterised by a tension between "safety" and "openness." The EU’s AI Act, the world’s first comprehensive AI law, technically offers an exemption for open-source models to support research, but this "safe harbor" is narrower than it appears. It disappears if the model poses a "systemic risk" (presumed from the amount of compute used in training) or is part of a high-risk application, effectively forcing major open-weight developers to comply with heavy transparency and documentation rules. Conversely, the United States is currently leaning against strictly regulating open weights. The National Telecommunications and Information Administration (NTIA) recently advised against restricting open model weights, arguing that the current risks do not outweigh the benefits of innovation, though export controls are increasingly being used to prevent "dual-use" (military-capable) AI from reaching adversaries.

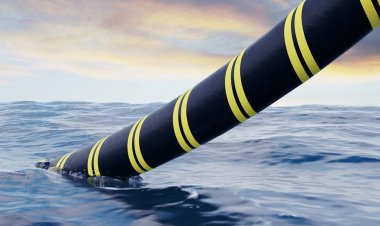

China is combining strict domestic control with strategic global expansion. Domestically, China’s "Interim Measures" require all generative AI models available to the public, including open-source and open-weight models, to align with "core socialist values". These models also have to pass rigorous security assessments before release, effectively creating a "license to release" regime that makes truly rapid open-source development difficult. Internationally, however, Chinese entities are aggressively releasing powerful open-weight models (like the Qwen and DeepSeek series) to capture global market share and establish their technical standards in the Global South. This has turned open-weight models into a geopolitical football, where regulations are not just about safety, but about who controls the underlying infrastructure of the future digital economy.

The proliferation of open-weight models has fundamentally altered the security calculus. For India, the genie is out of the bottle. We cannot regulate these models out of existence without isolating ourselves from the global pace of innovation. These tools are critical for our vision of an AI-powered economy, enabling local solutions that Western tech giants will never build for us.

However, the "open door" brings a draft. The democratisation of high-end cyber and psychological warfare tools places immense pressure on India’s digital and social fabric. Whether it is protecting the integrity of our financial systems or the sanctity of our electoral process, the buffer time between a technological breakthrough and a security threat has vanished.

The priority for Indian policymakers must shift from "controlling access" to "building immunity." We need a dual-track approach: aggressively supporting the open-source ecosystem to ensure India remains a maker, not just a taker, of technology, while simultaneously treating disinformation defence and cyber-resilience as national security imperatives.

Disclaimer: This paper is the author's individual scholastic contribution and does not necessarily reflect the organization's viewpoint.

Sharath Kumar Kolipaka completed his master’s in Diplomacy, Law and Business from Jindal School of International Affairs, specializing in peace and conflict studies. He is a Research Fellow at The New Global Order.